The Real Reason AI Platforms Don’t Publish Manuals

This isn’t a critique of users or platforms. It’s simply an observation about what people do when they’re handed powerful systems that don’t fully explain themselves.

One of the more interesting things about modern AI tools isn’t the output itself. It’s the culture that forms around how people think the tools work.

Spend time in almost any AI-related community and you’ll see the same patterns repeat. Certain symbols are said to unlock better results. Specific ordering supposedly forces outcomes. A handful of words get treated like cheat codes.

I’ve fallen for this myself. More than once.

When something worked, I wanted a reason that felt repeatable. Preferably something I could point to, name, and reuse. It took longer than I’d like to admit to realize that what I was reacting to wasn’t a hidden mechanic at all, but simply the absence of conflicting instructions.

That instinct isn’t unusual. It’s human.

When there’s no manual, communities fill the gap

If AI platforms released precise, technical documentation explaining exactly how prompts are interpreted, a lot of folklore would disappear overnight. Instead, users are left to experiment, compare notes, and draw conclusions from inconsistent results.

What follows is predictable.

Experimentation starts to feel like discovery.

Small wins feel like mastery.

Patterns harden into rules.

Those rules turn into insider knowledge.

What emerges isn’t documentation. It’s folklore.

You see this anywhere outcomes are probabilistic and feedback is noisy: language models, image generators, SEO tools, trading systems, even audio software. Humans don’t tolerate ambiguity very well. When systems won’t explain themselves, we explain them to each other.

Why tricks feel real, even when they aren’t magic

A lot of attention gets paid to formatting choices, delimiters, or prompt structure. People swear one symbol works better than another, or that ordering alone changes everything. And sometimes, it really does seem to.

But that doesn’t make the symbol special.

Most of the time, what’s actually happening is simpler than people want it to be. Fewer competing instructions. Clearer grouping of ideas. Stronger emphasis on what comes first.

In short, clarity beats chaos.

Structure matters. Priority matters. Constraints matter.

The symbol just happens to be the most visible part of the process, which also makes it the easiest thing to mythologize. Structure is boring to explain. A “secret operator” is not.

At some point, “prompt engineering” stops being engineering and starts looking a lot like superstition with better branding. That line might sound harsh, but if you’ve watched people defend tricks long after they stop working, it’s hard to unsee.

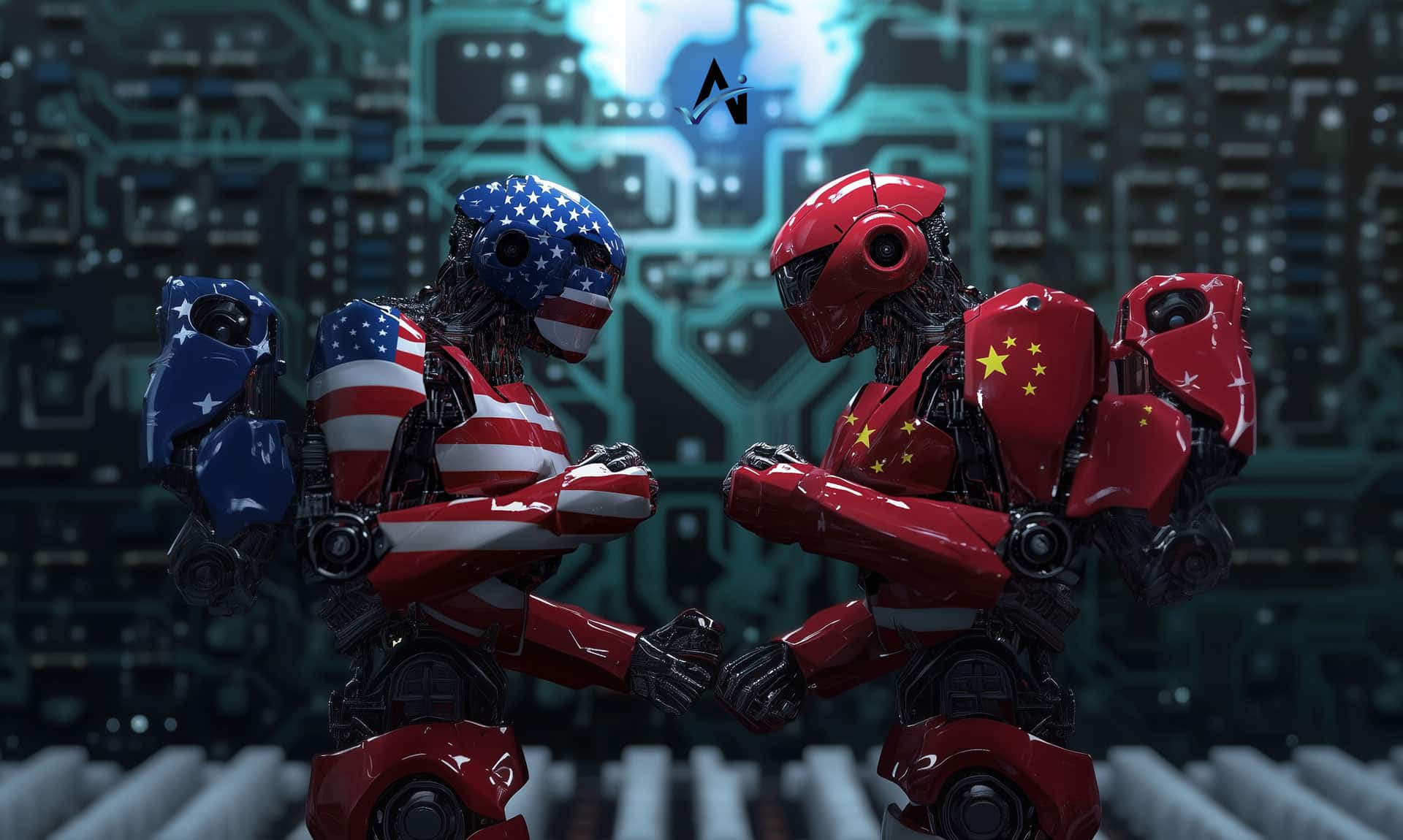

Why platforms stay vague on purpose

From a product standpoint, publishing a true instruction manual would create real problems.

- It would invite systematic prompt gaming.

- It would narrow creative outcomes.

- It would generate endless support disputes that start with “your guide says X.”

- It would lock platforms into promises probabilistic systems can’t reliably keep.

But there’s another reason that rarely gets talked about.

Manuals create liability

The moment an AI company publishes a detailed explanation of how its system works, it isn’t just educating users. It’s creating evidence.

In industries like music, art, writing, and code, that matters.

If a platform explicitly documents how prompts influence structure, style, or output similarity, it risks giving entire industries a clearer legal argument. Not speculation. Not inference. Documentation.

A manual can become a roadmap for lawsuits.

Music generation is the obvious example. The more transparent a system is about how it produces songs that resemble genre, style, or structure, the easier it becomes for rights holders to argue intent, replication, or substitution.

Vagueness is not just a creative choice. It’s a legal one.

“Creative systems are nondeterministic” isn’t only a technical truth. It’s a protective boundary. It avoids hard claims about causality, influence, or repeatability that could be challenged in court.

From that perspective, ambiguity isn’t a bug. It’s risk management.

The psychology behind “secret sauce”

What keeps these myths alive isn’t ignorance. It’s the desire for control.

Believing you’ve found a trick reduces uncertainty. It creates a sense of progress. It signals insider status. And it delivers an emotional payoff even when results are coincidental.

When something works, we credit the most recent change. When it doesn’t, we quietly drop the rule and move on. Over time, only the success stories stay visible.

That isn’t stupidity. It’s pattern recognition doing what it has always done, sometimes a little too eagerly.
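If you want to see how little it takes for this to happen, here is a minimal sketch in Python. It assumes a made-up world where every "trick" has no effect at all and a good result shows up 30% of the time regardless. The function name, the 30% base rate, and the number of tricks are all illustrative assumptions, not measurements from any real tool.

```python
import random

def simulate_folklore(num_tricks=10_000, base_rate=0.3, followups=20, seed=0):
    """Simulate tricks that do nothing, adopted only if the first try looks good.

    Returns (number of adopted tricks, success rate of adopted tricks afterwards).
    All parameters are hypothetical and chosen only for illustration.
    """
    rng = random.Random(seed)
    adopted = 0
    followup_successes = 0
    for _ in range(num_tricks):
        # First try: the result is good purely by chance.
        if rng.random() < base_rate:
            # A lucky first result gets remembered as a "rule".
            adopted += 1
            # Later uses of the rule are still governed by the same base rate.
            followup_successes += sum(
                rng.random() < base_rate for _ in range(followups)
            )
        # Tricks that fail on the first try are quietly dropped and never counted.
    followup_rate = followup_successes / (adopted * followups) if adopted else 0.0
    return adopted, followup_rate

adopted, followup_rate = simulate_folklore()
print(f"tricks adopted after one lucky result: {adopted}")
print(f"success rate of adopted tricks afterwards: {followup_rate:.2f}")
```

In this toy setup, every adopted trick has a success story behind it, yet the follow-up success rate stays close to the base rate. The only thing the selection step changed is which stories got remembered.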

The business of tips, tricks, and insider knowledge

There’s another reason these myths persist. They make money.

Entire micro-economies exist around AI tips, hacks, and prompt secrets. Blog posts, YouTube channels, newsletters, and courses can all generate real income by promising shortcuts. Often the advice underneath is reasonable. It’s the framing that does the work.

Content that says “be clear and intentional” doesn’t spread very far.

Content that says “use THIS to unlock better results” spreads fast.

Mystery converts better than nuance. That’s not a moral judgment. It’s just marketing.

As long as AI systems remain opaque, there will be demand for people who claim to have decoded them. Some of them make a very good living doing so.

If this feels familiar, it’s probably because you’ve seen headlines like these before.

- “This ONE Prompt Trick Instantly Improves AI Results”

- “Why 99% of Users Prompt AI Wrong”

- “Stop Using AI Until You Know This Secret”

- “The Hidden Syntax AI Doesn’t Want You to Know”

- “I Tested 100 Prompts. This Format Wins Every Time”

- “This Symbol Changed Everything”

No names required. The pattern is obvious.

Operators and recipe followers

Over time, a quiet divide forms in communities built around opaque systems.

Some people collect tricks. They copy templates. They chase whatever insight is trending that week.

Others test assumptions. They watch what actually changes outcomes. They understand why something might work today and fail tomorrow.

The difference isn’t intelligence. It’s orientation.

Operators don’t reject tricks. They just don’t depend on them. They know shortcuts expire. Principles don’t.
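For what "testing assumptions" can look like in practice, here is a rough sketch in Python. It assumes you have run the same task many times with and without a trick and counted how often the result was acceptable. The function name and the counts are hypothetical, and the two-standard-error threshold is a rule of thumb rather than a rigorous statistical test.

```python
import math

def compare_variants(successes_a, runs_a, successes_b, runs_b):
    """Compare success rates of two prompt variants from repeated runs.

    Returns (difference in rates, standard error of the difference,
    whether the difference exceeds roughly two standard errors).
    """
    rate_a = successes_a / runs_a
    rate_b = successes_b / runs_b
    diff = rate_b - rate_a
    # Standard error of the difference between two independent proportions.
    se = math.sqrt(
        rate_a * (1 - rate_a) / runs_a + rate_b * (1 - rate_b) / runs_b
    )
    looks_real = abs(diff) > 2 * se
    return diff, se, looks_real

# Hypothetical counts: 30 good results out of 100 without the trick,
# 33 good results out of 100 with it.
diff, se, looks_real = compare_variants(30, 100, 33, 100)
print(f"difference: {diff:+.2f}, standard error: {se:.2f}, looks real: {looks_real}")
```

With counts like these, a three-point improvement sits well inside the noise. That is the kind of result that tends to get posted as a win when it comes from a single side-by-side comparison.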

The real takeaway

AI tools don’t need secret sauce.

Language itself is the interface.

The most reliable improvements don’t come from hacks or symbols. They come from fewer conflicting instructions, intentional prioritization, clear constraints, and realistic expectations.

The best users don’t memorize tricks.

They understand why tricks sometimes work, and why they sometimes stop.

That understanding lasts longer than any tip or trick ever will.
